Ask an Expert: So just what is a 'killer robot'?

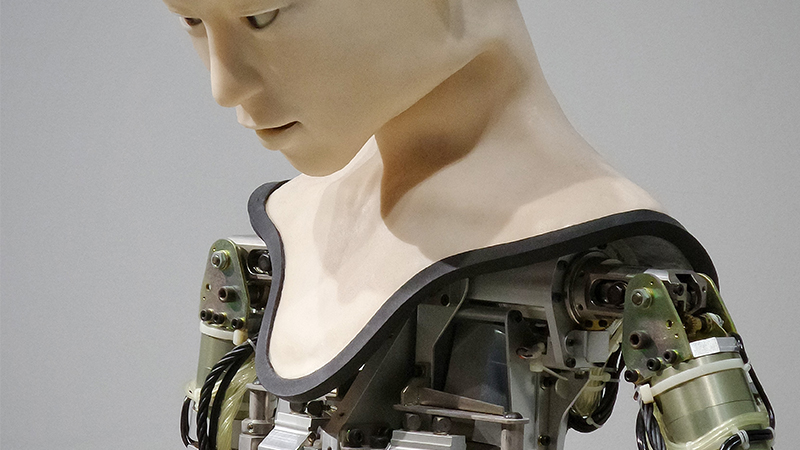

Chances are you have heard the term 'killer robot', but what exactly are killer robots? Are they inevitable, or do they already exist? We sat down with UNSW Canberra academic Austin Wyatt to find out more.